Cosmological Simulations

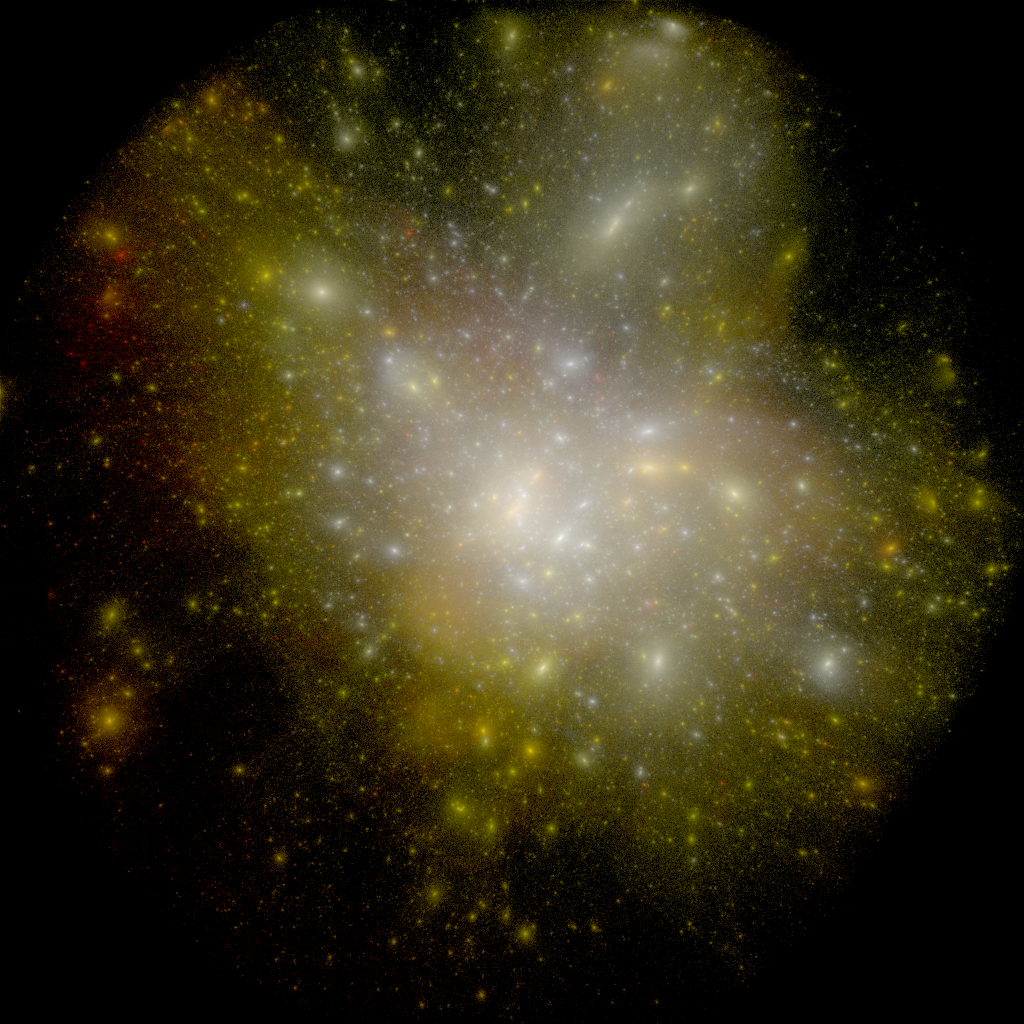

Cosmological simulations are primarily defined by their volume and by the number of computational elements (particles) used to discretize the mass in the Universe. Once these are chosen, each simulation is evolved from very small fluctuations in an otherwise uniform distribution, using gravitational N-body integrators in an expanding background Universe. Over roughly 14 billion years of evolution, these particles cluster into gravitationally bound structures that pull in baryonic matter, which forms stars, galaxies, and clusters of galaxies. All of the datasets here are derived from the Dark Sky Simulations, a project that was awarded a DOE INCITE computing allocation of 80 million CPU-hours. The largest simulations run by this project cover nearly 12 gigaparsecs on a side (38 billion light-years across) and use 1.1 trillion particles to discretize the volume, totaling nearly half a petabyte of output. This year's contest will have access both to the very large-scale data releases from the Dark Sky Simulations project and to smaller volumes and particle counts for developing the visualization methods and user interface.
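To get a feel for what these numbers imply, the sketch below estimates the mean interparticle spacing and the mass carried by each particle for a cubic box about 12 Gpc on a side sampled by 1.1 trillion particles. The matter density parameter (Ω_m = 0.3), the Hubble parameter (h = 0.7), and the exact 12 Gpc box side are illustrative assumptions, not the Dark Sky production values.

```python
# Back-of-the-envelope resolution estimate for a cosmological N-body box.
# OMEGA_M and H are illustrative assumptions, not the Dark Sky parameters.
OMEGA_M = 0.3            # assumed matter density parameter
H = 0.7                  # assumed dimensionless Hubble parameter
RHO_CRIT_H2 = 2.775e11   # critical density in h^2 M_sun / Mpc^3


def resolution(box_mpc: float, n_particles: float) -> tuple[float, float]:
    """Return (mean interparticle spacing in Mpc, particle mass in M_sun)."""
    # Particles fill the cube uniformly at the start, so spacing = L / N^(1/3).
    spacing = box_mpc / n_particles ** (1.0 / 3.0)
    # Mean matter density of the Universe in M_sun / Mpc^3.
    rho_matter = OMEGA_M * RHO_CRIT_H2 * H**2
    # Total matter in the box shared equally among all particles.
    particle_mass = rho_matter * box_mpc**3 / n_particles
    return spacing, particle_mass


spacing, mass = resolution(box_mpc=12_000.0, n_particles=1.1e12)
print(f"spacing ~ {spacing:.2f} Mpc, particle mass ~ {mass:.2e} M_sun")
```

Under these assumptions each particle represents several times 10^10 solar masses, so in the largest box only fairly massive halos are sampled by many particles; the smaller volumes trade total size for finer mass resolution.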

Data Types

There are three primary types of data that will be used in this year's contest. The first is the raw particle data, in which each particle is described by a position vector, a velocity vector, and a unique particle identifier. Each snapshot in time (there will be approximately 100) is stored in a single file in a format called SDF (https://bitbucket.org/JohnSalmon/sdf). This format consists of a human-readable ASCII header followed by raw binary data. Python and C-based interfaces to the data format will be provided.

The second type of dataset is called a Halo Catalog. It is a database that groups sets of gravitationally bound particles into coherent structures (halos). Along with each halo's position, shape, and size, the catalog includes a number of statistics derived from its particle distribution, such as angular momentum, the relative concentration of the particles, and many more. These catalogs are stored in both ASCII and binary formats.

The final dataset type links the individual halo catalogs, each of which represents a single snapshot in time, into a Merger Tree database. These merger trees form a sparse graph that can be analyzed to extract quantities such as halo mass accretion and merger history, which in turn inform how galaxies form and evolve through cosmic time. Merger tree databases are also distributed in both ASCII and binary formats.
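The merger-tree idea can be illustrated with a small sketch that links halos in consecutive snapshots by the number of particle identifiers they share, then walks the resulting sparse graph backward along the most massive progenitor to recover a mass accretion history. Particle-ID matching is one common way to build such trees, not necessarily the method used for the Dark Sky merger trees; the toy halos, the helpers `link_descendants` and `main_branch_masses`, and the use of particle counts as masses are all hypothetical.

```python
from collections import defaultdict

# Hypothetical toy input: for each snapshot, a dict halo_id -> set of particle IDs.
# Halo mass is taken as the particle count (equal-mass particles assumed).
snapshots = [
    {"a0": {1, 2, 3}, "b0": {4, 5}},
    {"a1": {1, 2, 3, 4, 5, 6}},
    {"a2": {1, 2, 3, 4, 5, 6, 7, 8}},
]


def link_descendants(snapshots):
    """Map (snap, halo) -> (snap + 1, descendant) by maximum shared particle count."""
    desc = {}
    for t in range(len(snapshots) - 1):
        # Invert the next snapshot: particle ID -> halo that contains it.
        owner = {pid: hid for hid, pids in snapshots[t + 1].items() for pid in pids}
        for hid, pids in snapshots[t].items():
            counts = defaultdict(int)
            for pid in pids:
                if pid in owner:
                    counts[owner[pid]] += 1
            if counts:
                best = max(counts, key=counts.get)
                desc[(t, hid)] = (t + 1, best)
    return desc


def main_branch_masses(snapshots, desc, root):
    """Follow the most massive progenitor back from `root`; return masses oldest-first."""
    # Reverse the descendant links into a progenitor adjacency list (the sparse graph).
    progenitors = defaultdict(list)
    for node, d in desc.items():
        progenitors[d].append(node)
    masses, node = [], root
    while node is not None:
        t, hid = node
        masses.append(len(snapshots[t][hid]))
        progs = progenitors.get(node, [])
        node = max(progs, key=lambda p: len(snapshots[p[0]][p[1]]), default=None)
    return masses[::-1]


desc = link_descendants(snapshots)
history = main_branch_masses(snapshots, desc, root=(2, "a2"))
print(desc)
print(history)
```

Here halos `a0` and `b0` share one descendant, so `a1` has two progenitors, which is how a merger shows up in the graph; the main branch keeps only the more massive one when tracing the accretion history.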

Get the Data

The data can be downloaded from either http://darksky.slac.stanford.edu/scivis2015/data or the SDSC cloud at https://cloud.sdsc.edu/v1/AUTH_sciviscontest/2015/. In addition to the data provided here, teams may choose to apply their visualization techniques to the broader Dark Sky Simulations data release, described at http://darksky.slac.stanford.edu/edr.html. We will be updating the available datasets in early 2015, but the formats are expected to remain the same.
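A minimal Python sketch for fetching files from either mirror follows. It assumes both mirrors expose the same directory layout under the base URLs given above; because individual file names are not listed here, the relative path must be taken from the directory listing, and the helpers `data_url` and `download` are hypothetical. Streaming with `shutil.copyfileobj` avoids holding multi-gigabyte snapshots in memory.

```python
import shutil
import urllib.request
from pathlib import Path
from urllib.parse import urljoin

# Base URLs from the text; the trailing slash matters, otherwise urljoin
# would replace the last path component instead of appending to it.
MIRRORS = [
    "http://darksky.slac.stanford.edu/scivis2015/data/",
    "https://cloud.sdsc.edu/v1/AUTH_sciviscontest/2015/",
]


def data_url(relative_path: str, mirror: int = 0) -> str:
    """Build a full URL for a path relative to one of the mirrors."""
    # Strip a leading slash so urljoin does not jump to the host root.
    return urljoin(MIRRORS[mirror], relative_path.lstrip("/"))


def download(relative_path: str, dest_dir: str = ".", mirror: int = 0) -> Path:
    """Stream a (potentially very large) file to disk in fixed-size chunks."""
    Path(dest_dir).mkdir(parents=True, exist_ok=True)
    dest = Path(dest_dir) / Path(relative_path).name
    with urllib.request.urlopen(data_url(relative_path, mirror)) as resp, open(dest, "wb") as out:
        shutil.copyfileobj(resp, out, length=1 << 20)  # copy in 1 MiB chunks
    return dest
```

For example, `download(path, dest_dir="data", mirror=1)` would fetch the same relative `path` from the SDSC cloud instead of SLAC, which is useful if one mirror is slow or unavailable.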

Data Description

For an initial description of the data formats, please see http://darksky.slac.stanford.edu/data_release/ and https://bitbucket.org/darkskysims/sdf.

For examples of reading SDF data in Fortran or C, or with the libSDF library, see http://bitbucket.org/darkskysims/sdf_example.

If you prefer Python, you may want to check out yt, which has built-in readers for the SDF data format. The I/O routines in yt have also been packaged into sdfpy (https://pypi.python.org/pypi/sdfpy), which can be installed with `pip install sdfpy`.
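For real files, yt or sdfpy should be used. For intuition about what such readers do, here is a minimal sketch of the "ASCII header followed by raw binary" idea: it parses a simplified, hypothetical SDF-like header into a NumPy structured dtype and then views the binary payload with `numpy.frombuffer`. The header grammar, the `# SDF-EOH` terminator, little-endian byte order, and the helper names `write_toy_sdf` and `read_toy_sdf` are assumptions for illustration, not the SDF specification.

```python
import io
import re

import numpy as np

# Simplified, SDF-like layout for illustration only; the real SDF grammar is richer.
# Assumptions: little-endian data, a "# SDF-EOH" end-of-header line, and one
# struct declaration describing fixed-size particle records.
C_TYPES = {"float": "<f4", "double": "<f8", "int": "<i4", "int64_t": "<i8"}
EOH = "# SDF-EOH"


def write_toy_sdf(fh, records):
    """Write an ASCII header followed by the raw bytes of a structured array."""
    n = len(records)
    header = (
        "# SDF 1.0\n"
        f"int npart = {n};\n"
        "struct {\n"
        "    float x, y, z;\n"
        "    float vx, vy, vz;\n"
        "    int64_t ident;\n"
        f"}}[{n}];\n"
        f"{EOH}\n"
    )
    fh.write(header.encode("ascii"))
    fh.write(records.tobytes())


def read_toy_sdf(fh):
    """Parse the ASCII header, then view the binary payload as a structured array."""
    params, fields, in_struct, count = {}, [], False, 0
    while True:
        raw = fh.readline()
        if not raw:
            raise ValueError("end-of-header marker not found")
        line = raw.decode("ascii").strip()
        if line == EOH:
            break
        if not line or line.startswith("#"):
            continue  # comments and blank lines
        if line.startswith("struct"):
            in_struct = True
        elif in_struct and line.startswith("}"):
            in_struct = False
            count = int(re.search(r"\[(\d+)\]", line).group(1))
        elif in_struct:
            # e.g. "float x, y, z;" -> [("x", "<f4"), ("y", "<f4"), ("z", "<f4")]
            ctype, names = line.rstrip(";").split(None, 1)
            fields += [(name.strip(), C_TYPES[ctype]) for name in names.split(",")]
        else:
            # Scalar parameter, e.g. "int npart = 3;"
            m = re.match(r"(\w+)\s+(\w+)\s*=\s*([^;]+);", line)
            if m:
                params[m.group(2)] = m.group(3).strip()
    dtype = np.dtype(fields)
    payload = fh.read(dtype.itemsize * count)
    return params, np.frombuffer(payload, dtype=dtype, count=count)


# Usage: round-trip three particles through an in-memory "file".
particle_dtype = np.dtype(
    [(name, "<f4") for name in ("x", "y", "z", "vx", "vy", "vz")] + [("ident", "<i8")]
)
particles = np.zeros(3, dtype=particle_dtype)
particles["x"] = [1.0, 2.0, 3.0]
particles["ident"] = [10, 11, 12]

buf = io.BytesIO()
write_toy_sdf(buf, particles)
buf.seek(0)
params, data = read_toy_sdf(buf)
print(params["npart"], data["ident"])
```

Translating the header's struct into a structured dtype lets the whole binary block be viewed at once rather than parsed record by record; for snapshots too large for memory, `numpy.memmap` with an `offset` equal to the header length applies the same idea lazily.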